Enacting Vulnerability: AI, Childhood, and the Co-construction of Vulnerable Data Subjects

Kick-off event by Paula Maria Helm & Delfina Sol Martinez Pandiani

- Date: 21 May 2025
- Time: 14:00-16:00
- Location: Oude Turfmarkt 145-147
- Room: Sweelinck Room

In the age of AI-driven research, how do tools not only reveal vulnerability but actively construct it?

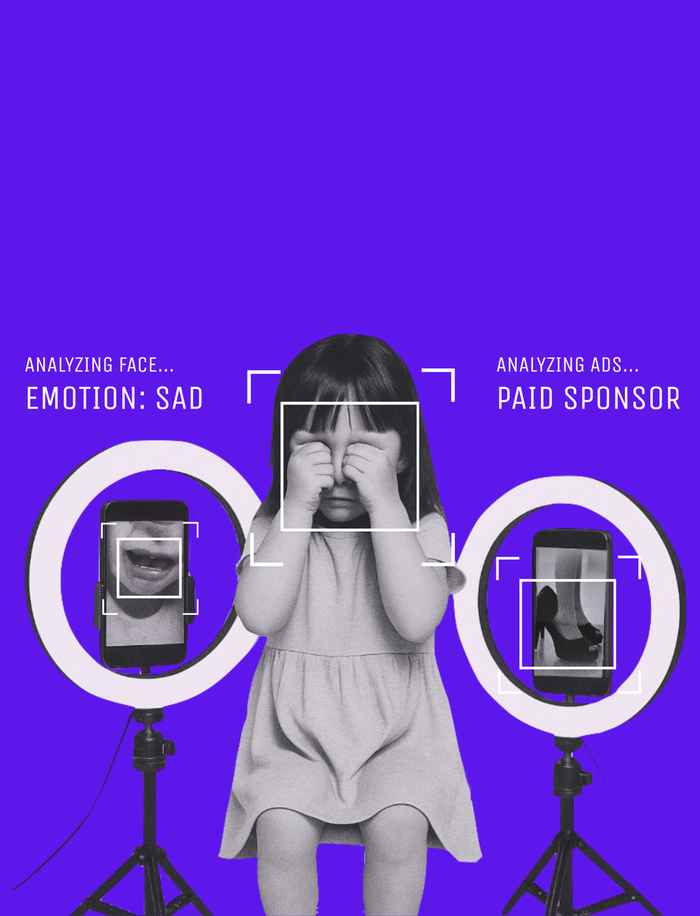

In today’s digital landscape, AI tools are increasingly used to analyze publicly available content on platforms like YouTube, TikTok, and Instagram. But these tools do more than observe: they actively shape what is visible and what is known, and in doing so they co-enact vulnerabilities. As these technologies probe deeper into individuals' personal data, the lines between data analysis and the amplification and exploitation of vulnerability begin to blur. This event explores the ethical challenges of using AI to analyze publicly shared, monetized data, focusing on how these tools interact with and co-produce the vulnerabilities of data subjects, particularly children. Through a case study of childfluencers (children featured in family vlogs), we will examine how AI practices reconfigure vulnerabilities in digital spaces.

The Case Study: Childfluencers and Family Vlogs

To ground this discussion in a real-world example, we will focus on the phenomenon of childfluencers: children whose childhoods are publicly displayed in family vlogs, turning them into sources of revenue. These children are subject to extensive exposure and scrutiny as part of their families’ online content. Their experiences range from the joy of opening gifts or going on trips to the emotional trauma of having deeply personal moments, such as the death of a pet, made public.

Family vlogs are part of a global trend, with influencer families around the world capitalizing on their children's presence. However, as this monetized visibility grows, so does the burden on these young individuals, often resulting in a loss of privacy, emotional harm, and exposure to inappropriate audiences. By examining this case study, we will explore how the data generated by childfluencers, which is often analyzed by AI tools such as facial recognition, sentiment analysis, and content recommendation systems, can shape and exacerbate their vulnerabilities.

Through this lens, we will address three key questions:

- What is vulnerability in datafied contexts?

We will challenge the assumption that vulnerability is a fixed or inherent characteristic and instead discuss how it emerges from platform dynamics, publicness, and the choices researchers make in analyzing and interpreting data. We will explore how vulnerability is enacted as a relational and situated state of data subjects.

- How do AI tools amplify or mitigate the vulnerabilities of data subjects?

We will examine how AI methods like facial recognition and affective computing don’t just detect vulnerability but actively reshape it by making certain bodies and behaviors visible or marking them as risky. By analyzing key moments in the technical pipeline where ethical decisions are made, we can explore how these decisions intersect with broader systems of platform surveillance and data extraction.

- How can data science researchers ethically navigate vulnerability in their work?

We will explore how ethical decision-making can be built into everyday data research practices and how researchers can be supported in reflecting on the impact of their choices. To this end, we will discuss the design of a tool, such as a gamified card deck or decision tree, to help researchers navigate ethical tensions and consider how their choices affect the vulnerability of data subjects.

Interactive Discussion and Audience Participation

This event combines discussion with opportunities for audience participation. Attendees will take part in live polls and reflection activities to explore the ethical implications of using AI tools on publicly shared, monetized content. These interactive elements will encourage participants to consider their own values, navigate the ethical tensions in research practice, and think critically about how knowledge practices co-configure vulnerabilities.

Why This Matters

The ethics of AI and data analysis are central to many contemporary debates in both tech and academia. By focusing on childfluencers as a case study, this event highlights not only the practical challenges of using AI with the necessary care but also how research itself influences data subjects.

Ultimately, we aim to reconceptualize vulnerability in data science as enacted, contextual, and ethically dynamic. Through this, we seek to offer both conceptual depth and practical tools for navigating the complexity that comes with it.